Faux-tonomy - the dangers of fake autonomy

The word autonomy is thrown around a lot these days, often to imply that software runs without human intervention. But that still does not mean software can make decisions outside the constraints of its own programming. It cannot learn what it was not programmed to learn. More importantly, it cannot arrive at the conclusion “no, I do not want to do this anymore”, which perhaps should be considered a core part of autonomy.

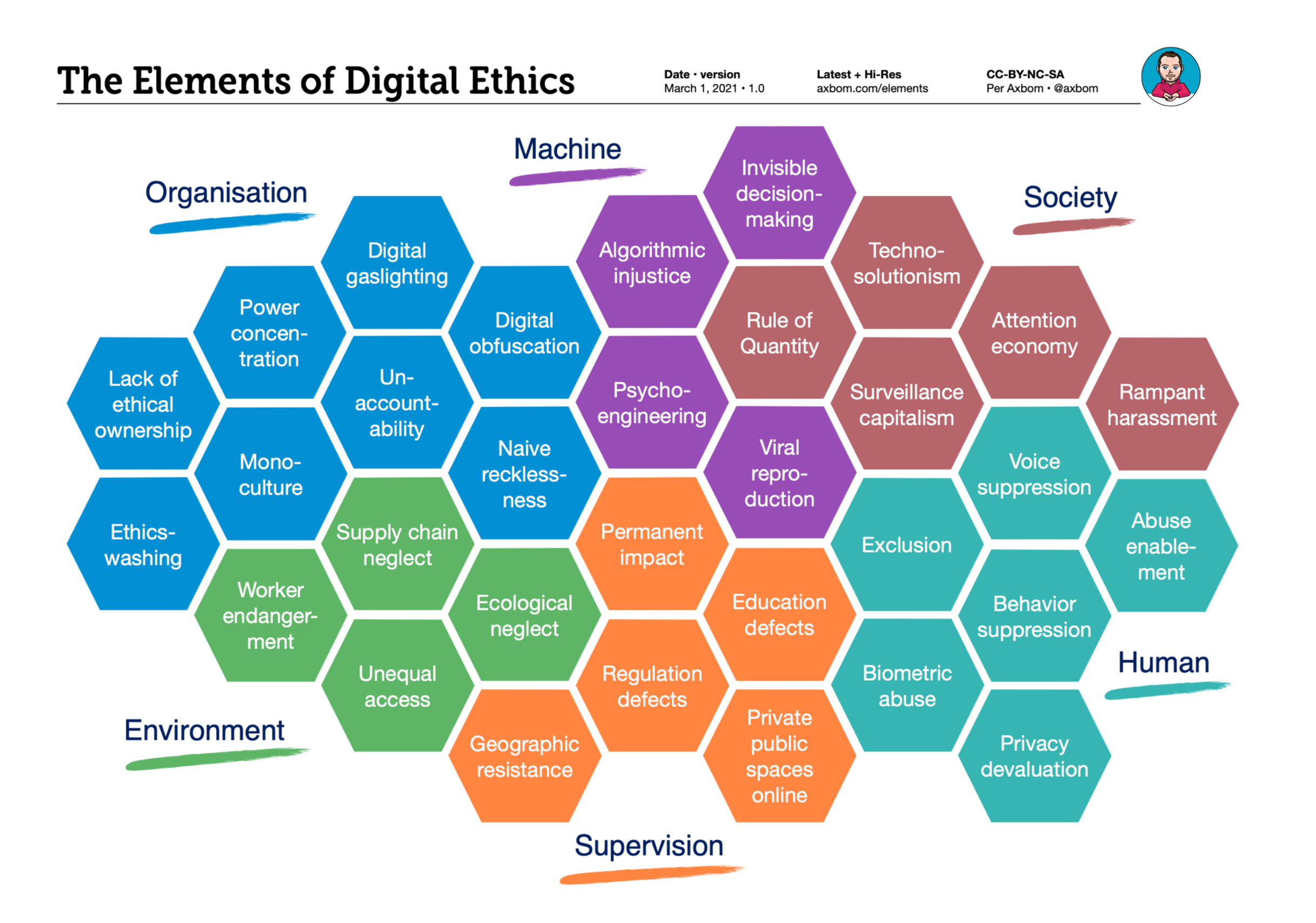

In The Elements of Digital Ethics, I refer to autonomous, changing algorithms under the topic of invisible decision-making.

I was called out on this, and I agree with the criticism. Our continued use of the word autonomous is misleading and could itself contribute to harm. First, it reinforces the illusion of thinking machines, something we should keep reminding ourselves we are nowhere close to achieving. Second, it gives makers an excuse to avoid accountability.

If we help perpetuate the idea of autonomous machines with free will, we help mislead lawmakers and society at large. More people will believe makers are faultless when the actions of software harm humans, and the duty of enforcing accountability will weaken.

Going forward, I will work on shifting my vocabulary. For example, I believe faux-tonomy (with an added explanation, of course) can draw attention to the deceptive nature of autonomy. When talking about learning, I will try to emphasise that it is simulated learning. When talking about behavior, I will strive to underscore that it is illusory.

I’m sure you will notice I have not addressed the term AI. It is itself an ever-changing concept, used carelessly by creators, media and lawmakers alike. We do best when we manage to avoid it altogether, or are very clear about what we mean by it.

Your thoughts on this are appreciated.